Articles - Page 3

30-Jun-2025

Introduction to Artificial Intelligence for Java Developers

A beginner-friendly guide to understanding artificial intelligence from the perspective of Java development. Learn how AI works, its key components, and how Java developers can start integrating AI into real-world applications.

22-Jun-2025

RAG Optimization Techniques: Proven Patterns, Anti-Patterns, and Practical Tips

Comprehensive guide to improving Retrieval-Augmented Generation (RAG) systems with actionable strategies, common mistakes to avoid, and expert best practices for robust AI applications.

15-Jun-2025

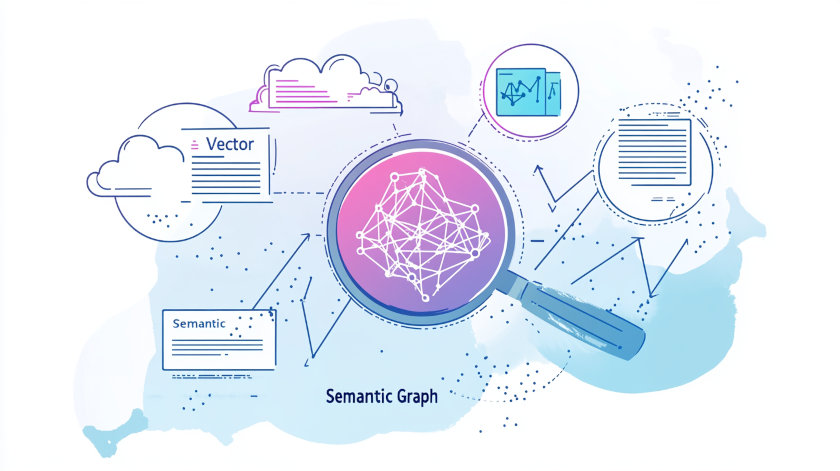

Unified AI Data Architecture for Legal Research: How PostgreSQL, PgVector, and Apache AGE Power Next-Gen Copilots

A detailed breakdown of the architecture behind the GraphRAG Legal Cases project using Azure Database for PostgreSQL, Apache AGE, and advanced AI/ML services. Learn how vector search, semantic ranking, and graph-based retrieval are combined to deliver high-quality legal research solutions at scale.

29-May-2025

Test results for locally running LLMs using Ollama on AMD Ryzen 7 8745HS

Local LLM benchmark data on GPU: AMD Ryzen 7 8745HS, 16/64 GB RAM